System Architecture

This section provides detailed architectural documentation for the CCAT Data Center infrastructure.

Infrastructure Overview

Components

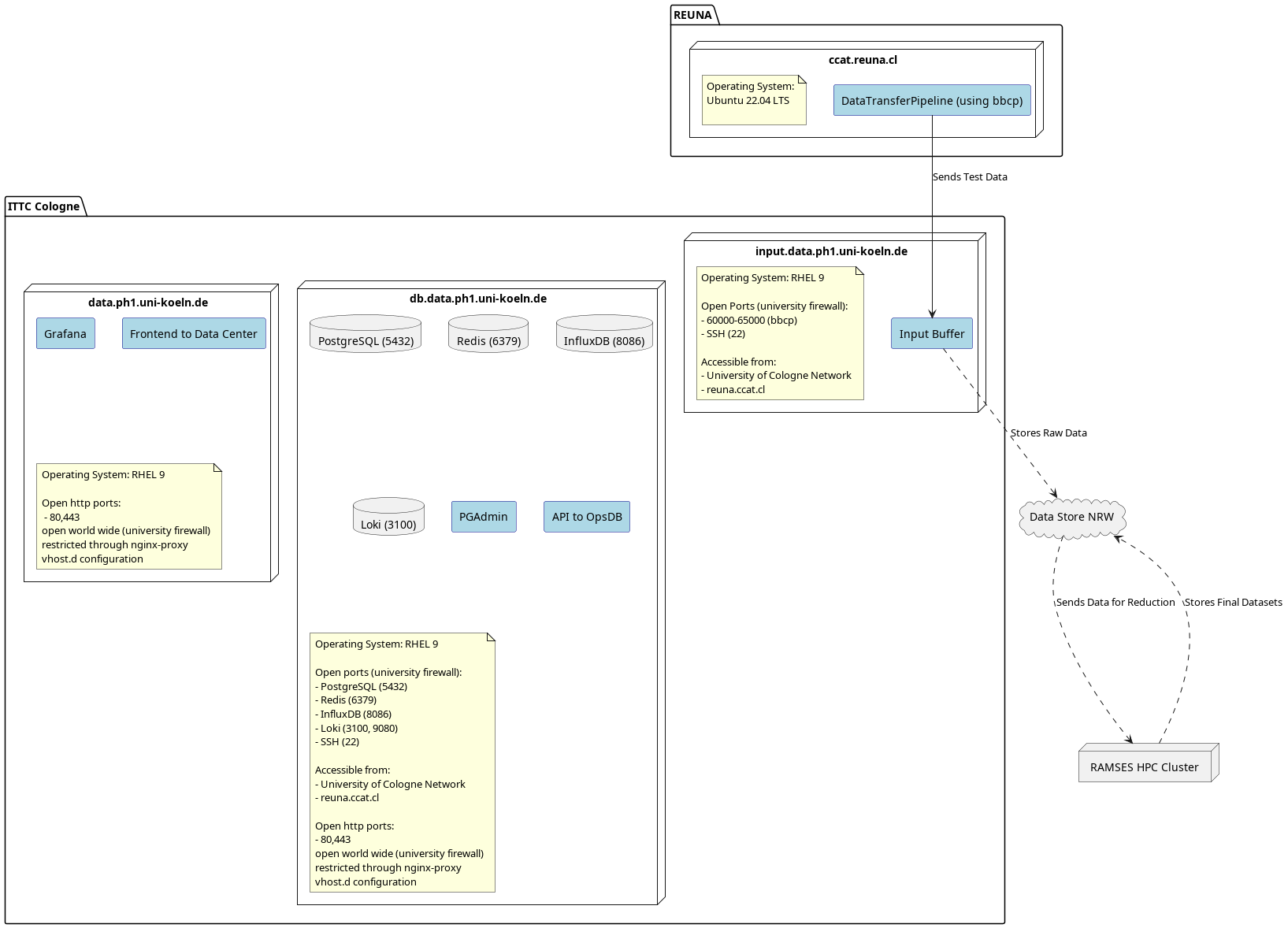

The CCAT Data Center consists of several integrated systems:

**Data Management Layer**

- Operations Database (OpsDB) - PostgreSQL database for observation metadata
- Object Storage (DataStorage.NRW) - S3-compatible object storage for raw and processed data files
- Time-Series Database - InfluxDB for metrics and monitoring data
- Logging Database - Loki for log data
- Transaction Buffering - Redis for transaction buffering and other caching needs
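
The Redis-backed transaction buffering can be pictured as a queue that accepts writes immediately and drains them into the database oldest-first once the database is reachable. The sketch below illustrates that idea under stated assumptions: an in-memory `collections.deque` stands in for a Redis list, and the `TransactionBuffer` class, its `commit` callback, and the `obs_id` field are hypothetical, not the actual ops-db-api implementation.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict


@dataclass
class TransactionBuffer:
    """In-memory stand-in for a Redis list used as a write buffer (hypothetical)."""

    queue: Deque[Dict] = field(default_factory=deque)

    def submit(self, txn: Dict) -> None:
        # Like Redis LPUSH: the newest transaction goes on the left end.
        self.queue.appendleft(txn)

    def flush(self, commit: Callable[[Dict], bool]) -> int:
        """Commit transactions oldest-first and stop at the first failure.

        A failed transaction stays queued, so ordering is preserved for the retry.
        """
        committed = 0
        while self.queue:
            txn = self.queue[-1]  # oldest entry, the end Redis RPOP would take
            if not commit(txn):
                break  # database unreachable: keep this and all later transactions
            self.queue.pop()
            committed += 1
        return committed


if __name__ == "__main__":
    buf = TransactionBuffer()
    buf.submit({"obs_id": 1})
    buf.submit({"obs_id": 2})
    print(buf.flush(lambda txn: False))  # 0: database down, both stay queued
    written = []
    print(buf.flush(lambda txn: written.append(txn["obs_id"]) or True))  # 2
    print(written)  # [1, 2]
```

Stopping at the first failed commit, rather than skipping it, keeps transactions in submission order, which matters when later writes depend on earlier ones.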

**Application Layer**

- ops-db-api - RESTful API for data access and filing
- ops-db-ui - Web interface for browsing and management
- data-transfer - Automated data movement services

Infrastructure Layer

Container Platform - Docker and Kubernetes (on RAMSES)

Monitoring Stack - Monitoring & Observability - InfluxDB, Grafana, Loki, and Promtail for observability

Secrets Management - [Infisical](infisical_secrets_management) for credential management

Reverse Proxy - nginx for routing and SSL termination

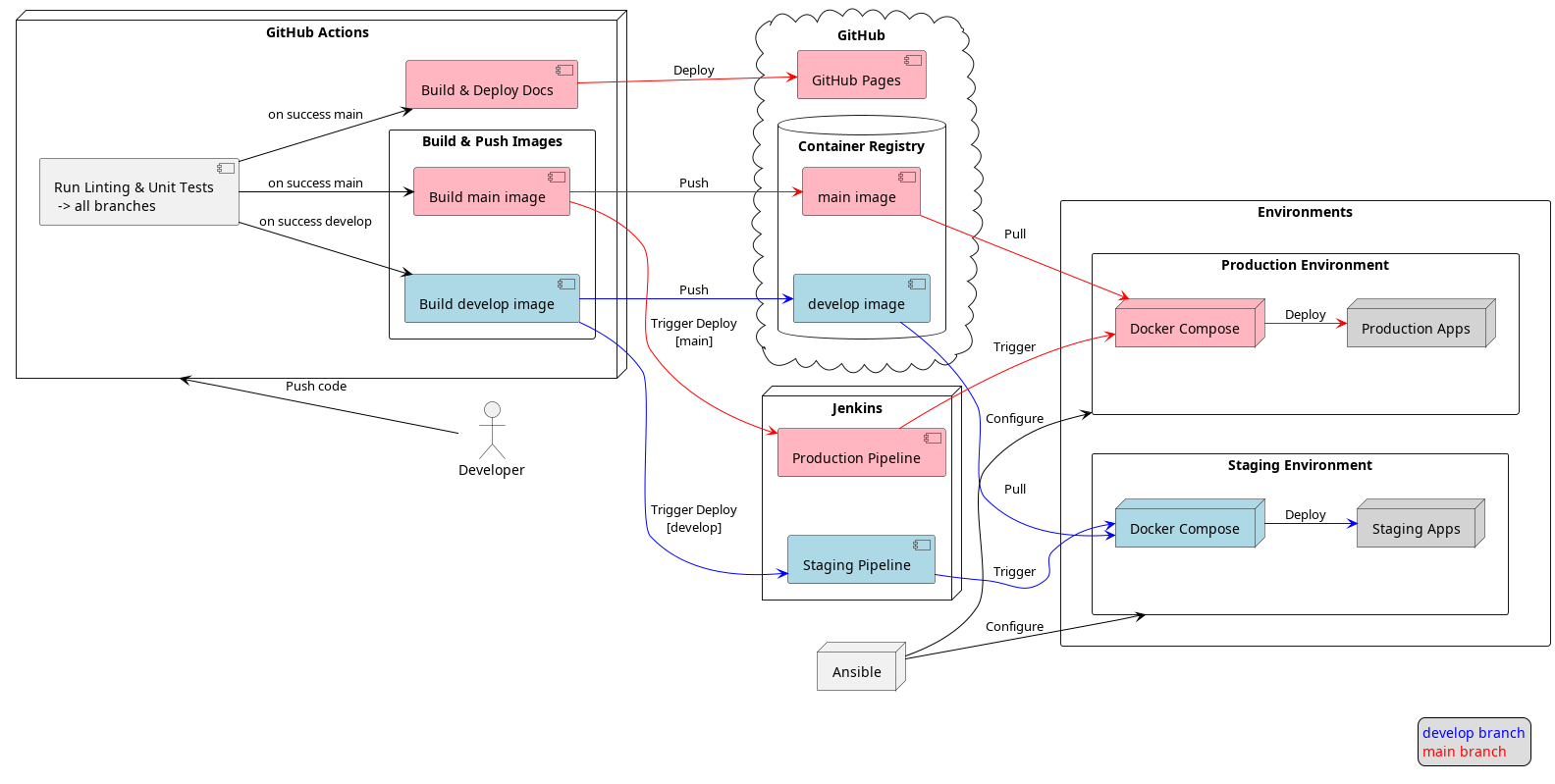

CI/CD - GitHub Actions for automated builds and Jenkins for deployment

Configuration Management - Ansible for configuration management
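
The reverse proxy forwards each request to a backend service based on its URL path. The sketch below is a simplified model of nginx prefix `location` matching, in which the longest matching prefix wins; regex locations are ignored, and the route table (`/api/` to ops-db-api, `/` to ops-db-ui) is a hypothetical example rather than the deployed nginx configuration.

```python
from typing import Dict, Optional

# Hypothetical route table: URL path prefix -> upstream service name.
ROUTES: Dict[str, str] = {
    "/api/": "ops-db-api",
    "/": "ops-db-ui",
}


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream whose prefix is the longest match for `path`."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None  # no prefix matches this path
    return routes[max(matches, key=len)]


if __name__ == "__main__":
    print(route("/api/observations"))  # ops-db-api
    print(route("/browse"))  # ops-db-ui
```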

**Hardware and Virtual Machine Layer**

- Input Nodes - Nodes for receiving data from the telescope
  - 3 R640 servers in Serverhall 2 (high availability)
- Telescope Data Buffer Node - Stores data at the telescope and transmits it to the input nodes
- Staging System - Virtual machines in the Cologne Data Center for staging data
- RAMSES HPC Cluster - HPC cluster for processing data
- S3 Object Store - S3-compatible object storage for raw and processed data files
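
Because the three input nodes are redundant, a sender such as the telescope data buffer node only needs one of them to be reachable. The sketch below assumes a simple ordered failover; the host names `input-1` to `input-3` and the `is_healthy` check are hypothetical, and the actual high-availability mechanism of the input nodes is not described in this section.

```python
from typing import Callable, Iterable, Optional

# Hypothetical host names; the real input-node names are not given here.
INPUT_NODES = ["input-1", "input-2", "input-3"]


def pick_input_node(
    nodes: Iterable[str], is_healthy: Callable[[str], bool]
) -> Optional[str]:
    """Return the first node that passes the health check, or None if all fail."""
    for node in nodes:
        if is_healthy(node):
            return node
    return None


if __name__ == "__main__":
    down = {"input-1"}
    print(pick_input_node(INPUT_NODES, lambda n: n not in down))  # input-2
    print(pick_input_node(INPUT_NODES, lambda n: False))  # None
```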

For a detailed overview of the hardware infrastructure, please refer to the /operations/hardware/index section.

Network Architecture

Note

Detailed network diagrams and configuration will be added here.

The data center is accessible from:

Deployment Topology

Deployment uses GitHub Actions to build Docker images for the individual services and push them to the container registry. A local Jenkins server then deploys the services to the different environments. The entire stack is deployed with Docker Compose; we chose not to use Kubernetes to avoid the complexity of operating a Kubernetes cluster and the overhead of managing its resources.
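
In essence, a Jenkins deploy step pulls the images that the CI builds pushed and then updates the running stack with Docker Compose. The sketch below builds the commands such a step could run; `docker compose -p`, `-f`, `pull`, and `up -d` are standard Docker Compose CLI options, but the function, file names, and project name are hypothetical, and the actual Jenkins job is not shown here.

```python
from typing import List, Sequence


def compose_deploy_commands(compose_files: Sequence[str], project: str) -> List[List[str]]:
    """Return the argv lists for one deployment: pull new images, then update the stack."""
    base = ["docker", "compose", "-p", project]
    for path in compose_files:
        base += ["-f", path]  # later files override settings from earlier ones
    return [
        base + ["pull"],  # fetch the images pushed by the CI builds
        base + ["up", "-d"],  # recreate containers whose image or config changed
    ]


if __name__ == "__main__":
    # Hypothetical file and project names for a staging deployment.
    for cmd in compose_deploy_commands(
        ["docker-compose.yml", "docker-compose.staging.yml"], "ccat-staging"
    ):
        print(" ".join(cmd))
```

Passing each list to `subprocess.run(cmd, check=True)` would execute the commands and stop at the first failure. Listing several `-f` files lets an environment-specific file override settings from a shared base file, which is one way to keep a single stack definition across environments.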

The system supports multiple deployment configurations:

- Production - High-availability, multi-node Kubernetes cluster
- Staging - Virtual machines in the Cologne Data Center
- Local Development - Docker Compose for developer workstations and laptops

The integration of all services is defined in the CCAT System Integration Documentation repository.